
Thanks to their low-latency performance, TPUs are well-suited for real-time applications like recommendation engines and fraud detection.
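For latency-sensitive serving such as recommendations, one rough way to check whether a model fits a real-time budget is to time a compiled call after a warm-up run. The sketch below assumes JAX is installed. `score_items`, `top_k_items` and `median_latency_ms` are hypothetical helpers, and the dot-product scorer is a simplified stand-in for a real recommendation model, not a production architecture. The same code runs on CPU, GPU or TPU, whichever backend JAX detects.

```python
import time

import jax
import jax.numpy as jnp


@jax.jit
def score_items(user_vec, item_matrix):
    # Hypothetical scorer: dot product of one user embedding with every
    # item embedding (a simplified stand-in for a recommendation model).
    return item_matrix @ user_vec


def top_k_items(user_vec, item_matrix, k):
    # Return the k highest scores and the indices of those items.
    scores = score_items(user_vec, item_matrix)
    return jax.lax.top_k(scores, k)


def median_latency_ms(fn, *args, runs=50):
    # Warm-up call: the first invocation of a jitted function triggers
    # compilation, so it is excluded from the measurement.
    fn(*args).block_until_ready()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        # JAX dispatches work asynchronously; block until the result is
        # ready so the timing covers the actual device computation.
        fn(*args).block_until_ready()
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    return timings[len(timings) // 2]


if __name__ == "__main__":
    key_u, key_i = jax.random.split(jax.random.PRNGKey(0))
    user = jax.random.normal(key_u, (64,))
    items = jax.random.normal(key_i, (10_000, 64))
    values, indices = top_k_items(user, items, 5)
    print("top items:", indices)
    print(f"median scoring latency: {median_latency_ms(score_items, user, items):.3f} ms")
```

Measuring the median over many warm runs, rather than a single call, keeps one-off compilation and scheduling noise out of the number you compare against your latency budget.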

TPUs are engineered to train large, complex models efficiently, from BERT to today's large language models, which can substantially reduce both training time and compute costs.

From climate models to protein simulations, TPUs empower researchers with the speed and power needed for breakthrough discoveries.

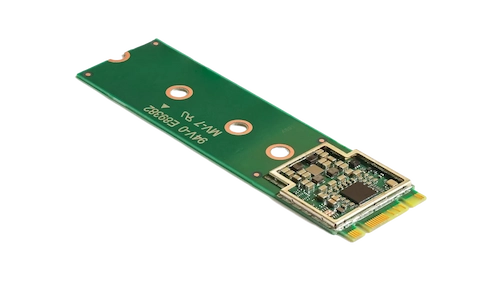

The Coral M.2 Accelerator, built around Google's Edge TPU coprocessor, brings fast, low-power machine learning inference to on-device applications. Adding it to your system enables efficient, real-time ML processing at the edge, reducing both latency and dependence on cloud resources.

The Hailo-8 edge AI processor delivers up to 26 TOPS in an ultra-compact package that is smaller than a penny, memory included. Its architecture is optimized for neural networks, enabling real-time deep learning on edge devices at low power draw. That makes it well suited to automotive, smart city, and industrial automation applications where high-performance AI must run at the edge while keeping energy use and overall costs down.

Built for matrix-heavy workloads, TPUs can deliver faster training and inference than general-purpose GPUs on many models.
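As a rough illustration of the matrix-heavy work TPUs target, the sketch below compiles a dense layer (matrix multiply, bias add, ReLU) with `jax.jit`. XLA lowers it to whichever backend is available, using the TPU's matrix units when run on a TPU host. It assumes JAX is installed; `dense_layer`, `make_inputs` and the shapes are illustrative only, and the bfloat16 inputs reflect a reduced-precision format TPUs support natively, not a tuned configuration.

```python
import jax
import jax.numpy as jnp


@jax.jit
def dense_layer(x, w, b):
    # One fully connected layer: a (batch x in) @ (in x out) matrix multiply,
    # a bias add, and a ReLU. On a TPU, XLA maps the matmul onto the chip's
    # matrix units; on CPU or GPU the same code compiles for that backend.
    return jax.nn.relu(x @ w + b)


def make_inputs(batch=128, d_in=256, d_out=512, dtype=jnp.bfloat16):
    # Random example inputs; bfloat16 is a reduced-precision format that
    # TPUs handle natively.
    kx, kw = jax.random.split(jax.random.PRNGKey(0))
    x = jax.random.normal(kx, (batch, d_in), dtype=dtype)
    w = jax.random.normal(kw, (d_in, d_out), dtype=dtype)
    b = jnp.zeros((d_out,), dtype=dtype)
    return x, w, b


if __name__ == "__main__":
    x, w, b = make_inputs()
    y = dense_layer(x, w, b)
    print(jax.default_backend(), y.shape, y.dtype)
```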

TPUs allow training to be distributed across multiple chips, so large-scale models can scale efficiently.
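A minimal data-parallel sketch in JAX, assuming JAX is installed: the batch is split across every visible device along a mesh axis named `data` (a name chosen here for illustration), the parameters are replicated, and `jax.jit` lets the XLA compiler insert the cross-device gradient reduction. The toy linear-regression model and the `loss_fn`, `train_step` and `train` helpers are hypothetical. On a single CPU device the mesh simply has one entry, so the same code runs anywhere.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

LEARNING_RATE = 0.1


def loss_fn(w, x, y):
    # Mean squared error of a toy linear model.
    return jnp.mean((x @ w - y) ** 2)


@jax.jit
def train_step(w, x, y):
    # With x and y sharded along the batch axis, XLA computes per-device
    # partial gradients and combines them across devices automatically.
    loss, grads = jax.value_and_grad(loss_fn)(w, x, y)
    return w - LEARNING_RATE * grads, loss


def train(steps=100, per_device_batch=64):
    n_devices = jax.device_count()
    mesh = Mesh(np.array(jax.devices()), axis_names=("data",))
    batch_sharding = NamedSharding(mesh, P("data"))  # split rows across devices
    replicated = NamedSharding(mesh, P())            # full copy on every device

    # Synthetic, noise-free data generated from known weights.
    true_w = jnp.array([2.0, -1.0, 0.5])
    x = jax.random.normal(jax.random.PRNGKey(0), (per_device_batch * n_devices, 3))
    y = x @ true_w

    x = jax.device_put(x, batch_sharding)
    y = jax.device_put(y, batch_sharding)
    w = jax.device_put(jnp.zeros(3), replicated)

    for _ in range(steps):
        w, loss = train_step(w, x, y)
    return w, loss


if __name__ == "__main__":
    w, loss = train()
    print("learned weights:", w, "final loss:", float(loss))
```

The global batch grows with the device count (`per_device_batch * n_devices`), which keeps each shard the same size as more chips are added; that is the usual starting point for data-parallel scaling.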

TPUs support popular ML frameworks such as TensorFlow, PyTorch (through OpenXLA), and JAX, making it straightforward to integrate them into your existing workflows.
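Because JAX compiles through XLA, the same program can run on CPU, GPU or TPU without code changes. The sketch below, assuming only that JAX is installed, reports which backend and devices JAX detected and returns the compiler input produced by `jax.jit(...).lower(...)` (StableHLO text in recent JAX versions). `describe_backend`, `softmax_rows` and `compiler_input_text` are illustrative helper names, not part of any library.

```python
import jax
import jax.numpy as jnp


def describe_backend():
    # Summarize the accelerator platform JAX picked up on this host,
    # e.g. "tpu" on a TPU VM or "cpu" on a laptop.
    devices = jax.devices()
    return {
        "backend": jax.default_backend(),
        "device_count": len(devices),
        "device_kinds": sorted({d.device_kind for d in devices}),
    }


def softmax_rows(x):
    # Row-wise softmax; any jnp code is traced and compiled the same way.
    return jax.nn.softmax(x, axis=-1)


def compiler_input_text(fn, example):
    # Lower the jitted function for the given example input and return the
    # intermediate representation that XLA compiles for the active backend.
    return jax.jit(fn).lower(example).as_text()


if __name__ == "__main__":
    print(describe_backend())
    print(compiler_input_text(softmax_rows, jnp.ones((2, 4))))
```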

Integration with Google Kubernetes Engine (GKE) and Vertex AI makes TPU-based AI workloads easy to orchestrate and manage.