Thanks to their low latency, TPUs are well suited to real-time applications such as recommendation engines and fraud detection.

TPUs are designed for efficient training of complex models such as GPT-4 and BERT, which significantly shortens training time and lowers compute costs.

From climate models to protein simulations, TPUs give researchers the speed and compute power they need to make breakthrough discoveries.

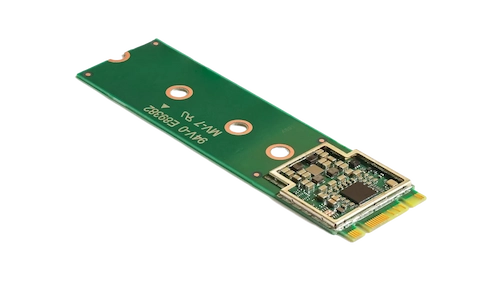

The Coral M.2 Accelerator speeds up on-device machine learning, delivering fast inference at minimal power consumption. Integrating it into a system enables efficient real-time machine learning at the edge, reducing latency and dependence on cloud resources.

The Hailo-8 edge AI processor delivers up to 26 TOPS in an ultra-compact package that is smaller than a penny, memory included. Its architecture, optimized for neural networks, enables real-time deep learning on low-power edge devices, which makes it a strong fit for automotive, smart city, and industrial automation applications. This efficient design supports high-performance AI at the edge while minimizing power consumption and overall cost.
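To put a peak figure such as 26 TOPS in perspective, the sketch below converts it into a theoretical ceiling on inferences per second. The function name, the `utilization` parameter, and the 8 billion operations per inference are hypothetical values chosen for illustration; they are not measurements of any real model or device, and real throughput will be lower than this upper bound.

```python
def max_inferences_per_second(peak_tops: float,
                              ops_per_inference: float,
                              utilization: float = 1.0) -> float:
    """Theoretical upper bound on inferences/s for a given peak TOPS rating.

    peak_tops         -- advertised peak throughput in tera-operations per second
    ops_per_inference -- operations one forward pass needs (assumed, model-specific)
    utilization       -- fraction of peak actually achieved (assumed, 0 < u <= 1)
    """
    if ops_per_inference <= 0:
        raise ValueError("ops_per_inference must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return peak_tops * 1e12 * utilization / ops_per_inference


# Hypothetical model needing 8e9 operations per inference on a 26 TOPS device.
ceiling = max_inferences_per_second(26, 8e9)
print(f"Upper bound at full utilization: {ceiling:.0f} inferences/s")
print(f"At an assumed 50% utilization:   "
      f"{max_inferences_per_second(26, 8e9, 0.5):.0f} inferences/s")
```

The result is only a ceiling: memory bandwidth, numeric precision, and how well the model maps onto the hardware all reduce the achieved rate.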

Designed for matrix-heavy workloads, TPUs deliver faster training and inference than traditional GPUs.

Training can be distributed across multiple units, so large models scale efficiently.
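One common way to spread training over several units is data parallelism: each unit computes a gradient on its own slice of the batch, and the results are averaged. The page does not say which strategy is used, so the following is a minimal pure-Python sketch under that assumption. The toy model `y = w * x` and every function name are hypothetical, and the "devices" are simulated by a simple loop.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error w.r.t. w for the toy model y = w * x."""
    terms = [2 * (w * x - y) * x for x, y in zip(xs, ys)]
    return sum(terms) / len(terms)


def shard(seq, num_devices):
    """Split seq into num_devices equal, contiguous slices."""
    size = len(seq) // num_devices
    return [seq[i * size:(i + 1) * size] for i in range(num_devices)]


def data_parallel_grad(w, xs, ys, num_devices):
    """Average of per-shard gradients; equals the full-batch gradient
    when all shards have the same size."""
    if len(xs) != len(ys) or len(xs) % num_devices != 0:
        raise ValueError("batch must split evenly across devices")
    # On real hardware each shard would run on its own accelerator.
    per_device = [grad_mse(w, sx, sy)
                  for sx, sy in zip(shard(xs, num_devices), shard(ys, num_devices))]
    return sum(per_device) / num_devices


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
print(grad_mse(1.0, xs, ys))               # full-batch gradient
print(data_parallel_grad(1.0, xs, ys, 2))  # same value from two simulated devices
```

Because the averaged shard gradients match the full-batch gradient, adding units changes how the work is split but not the update the model receives.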

TPUs support popular machine learning frameworks such as TensorFlow, PyTorch (via OpenXLA), and JAX, so they integrate smoothly with existing workflows.

Integrated with Google Kubernetes Engine (GKE) and Vertex AI, TPUs make it easy to orchestrate and manage AI workloads.